
ell - An Elegant, Powerful Framework for Prompt Engineering

If you interact with a large language model in your software, you’re probably going to end up writing some degree of boilerplate to deal with making requests, parsing responses, etc. That’s stuff someone, somewhere has already figured out a better solution for. Maybe you’ve looked into LangChain but felt it was a bit overkill, or found the docs hard to follow?

Introducing ell

ell is a recent framework from William Guss (previously of OpenAI) that provides a rich set of developer tooling for making requests to your choice of large language model. This ranges from making simple requests to a UI for monitoring and visualising those requests and their responses.

Here’s what a request to gpt-4o-mini looks like with ell. If you’ve got your OpenAI API key set as an environment variable (OPENAI_API_KEY), this will just work out of the box.

```python
import ell

# Extra API parameters such as temperature are passed to the decorator
@ell.simple(model="gpt-4o-mini", temperature=0.3)
def write_a_tip(name: str):
    """You are a helpful assistant."""  # System message
    return f"Write a python tip for a developer named {name}."  # User message

print(write_a_tip("Ian"))
```

Ell calls these functions Language Model Programs (LMPs), and they’re central to how everything works. Notice the @ell.simple decorator, which indicates that we want a plain text response and takes the model we want to use as an argument.
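To make the decorator idea more concrete, here’s a toy, standard-library-only sketch of the pattern @ell.simple follows from the outside: the docstring becomes the system message and the return value becomes the user message. This is not ell’s implementation. toy_simple and echo_model are hypothetical names, and echo_model is a fake that stands in for a real API call so the example runs offline.

```python
import functools
from typing import Callable

# Hypothetical model interface: (model name, chat messages) -> response text.
ModelFn = Callable[[str, list[dict[str, str]]], str]


def toy_simple(model: str, call_model: ModelFn):
    """Toy decorator (not ell's code): docstring -> system msg, return value -> user msg."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            messages = []
            if fn.__doc__:
                messages.append({"role": "system", "content": fn.__doc__.strip()})
            # Calling the original function only builds the user prompt.
            messages.append({"role": "user", "content": fn(*args, **kwargs)})
            return call_model(model, messages)
        return wrapper
    return decorator


def echo_model(model: str, messages: list[dict[str, str]]) -> str:
    # Fake "model" that echoes the messages it received instead of calling an API.
    return f"[{model}] " + " | ".join(f"{m['role']}: {m['content']}" for m in messages)


@toy_simple(model="gpt-4o-mini", call_model=echo_model)
def write_a_tip(name: str):
    """You are a helpful assistant."""
    return f"Write a python tip for a developer named {name}."


print(write_a_tip("Ian"))
# prints: [gpt-4o-mini] system: You are a helpful assistant. | user: Write a python tip for a developer named Ian.
```

Swapping echo_model for a function that calls a real client is all it would take to turn the sketch into a working (if bare-bones) wrapper. ell layers tracking, versioning and multiple providers on top of this basic idea.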

Compare the ell version to how you might do the same thing with OpenAI’s default client. There, we have to pass everything as separate parameters and dig through the response structure to find the LLM’s reply.
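For comparison, here’s a sketch of the same request written directly against the OpenAI Python SDK (assuming version 1.x, installed with `pip install openai`). The helper name build_messages is illustrative; the messages have to be assembled by hand, and the text is nested inside the response object.

```python
def build_messages(name: str) -> list[dict[str, str]]:
    # System and user messages are built by hand as role/content dicts.
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": f"Write a python tip for a developer named {name}."},
    ]


def write_a_tip(name: str) -> str:
    # Imported here so build_messages() can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        temperature=0.3,
        messages=build_messages(name),
    )
    # The generated text lives inside the first choice's message.
    return response.choices[0].message.content
```

Calling `print(write_a_tip("Ian"))` sends the request. Note how the prompt, the model settings and the response unpacking are all spread across the call site, which is exactly what ell’s decorator folds into the function definition.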

@ell.complex

Ell also provides another decorator, @ell.complex, for structured outputs, multimodal content, tool calling and maintaining chat history. Here’s an example from their docs that uses the same Pydantic models you would use with the OpenAI library, but with a much more concise function call.

```python
import ell
from pydantic import BaseModel, Field

class MovieReview(BaseModel):
    title: str = Field(description="The title of the movie")
    rating: int = Field(description="The rating of the movie out of 10")
    summary: str = Field(description="A brief summary of the movie")

# response_format asks the model for output matching the MovieReview schema
@ell.complex(model="gpt-4o-2024-08-06", response_format=MovieReview)
def generate_movie_review(movie: str):
    """You are a movie review generator. Given the name of a movie, you need to return a structured review."""
    return f"Generate a review for the movie {movie}"

review_message = generate_movie_review("The Matrix")
review = review_message.parsed  # the parsed MovieReview object
print(f"Movie: {review.title}, Rating: {review.rating}/10")
print(f"Summary: {review.summary}")
```

NB: If you don’t like docstrings being used for the system message, you can be more explicit by returning a list of messages built with ell.system and ell.user:

```python
import ell

@ell.simple(model="gpt-4o-mini", temperature=0.3)
def write_a_tip(name: str):
    # Explicit system and user messages instead of docstring + return value
    return [
        ell.system("You are a helpful assistant."),
        ell.user(f"Write a python tip for a developer named {name}."),
    ]
```

Monitoring Changes

Ell can log requests and responses to an SQLite database with a one-line change. The thinking is that every request you make holds value as part of an experiment, so tracking the changes you make is treated as really important. Each change to an LMP is tracked when it’s invoked, and we even get a commit log of descriptive change messages (generated using gpt-4o-mini). To enable this, initialise a store at the top of your script:

```python
import ell

# Log every LMP invocation to ./logdir; autocommit generates the commit messages
ell.init(store="./logdir", autocommit=True)

...
```

Ell Studio

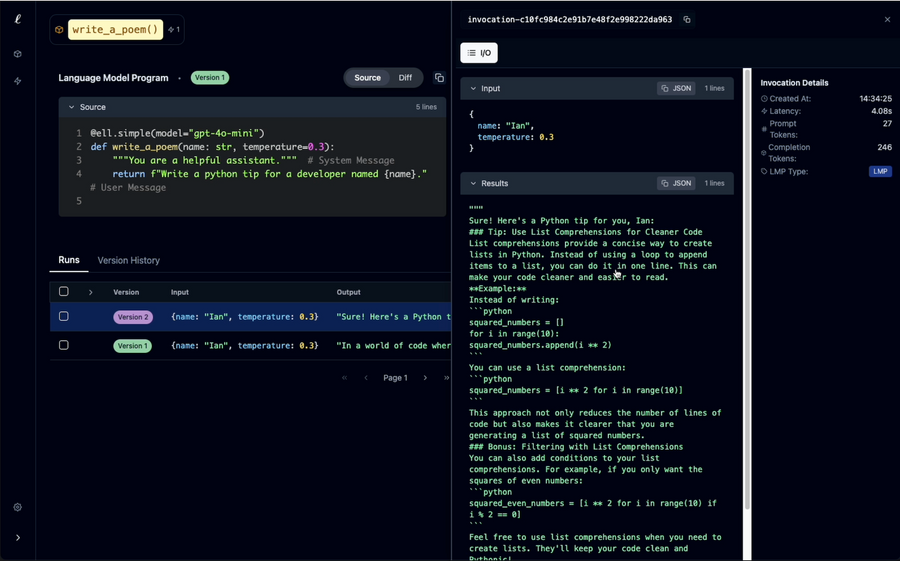

Where ell really shines is its ‘studio’, a web app for visualising the changes captured in the database. Here we can see the changes we’ve made at a glance. You can start it by pointing it at the store directory we initialised above:

```shell
ell-studio --storage ./logdir
```

When we look at the app running on port 5000, we can inspect all the requests we’re making, along with details such as the number of tokens used. We can also see the automatically generated versions of our LMPs and the diffs between them.
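To make the idea behind automatic versions and diffs concrete, here’s a toy sketch using only Python’s standard library: it logs calls to an SQLite table, derives a version id from a hash of the prompt source, and diffs two versions with difflib. The table layout and helper names (open_store, log_call, versions, diff_versions) are hypothetical and are not ell’s actual schema or code; ell’s real store and versioning logic are more involved.

```python
import difflib
import hashlib
import sqlite3


def version_id(source: str) -> str:
    # Content hash of the prompt source: any edit produces a new version id.
    return hashlib.sha256(source.encode("utf-8")).hexdigest()[:8]


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    # Hypothetical schema: one row per LMP invocation.
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS invocations ("
        "lmp TEXT, version TEXT, source TEXT, prompt TEXT, response TEXT)"
    )
    return conn


def log_call(conn: sqlite3.Connection, lmp: str, source: str,
             prompt: str, response: str) -> None:
    conn.execute(
        "INSERT INTO invocations VALUES (?, ?, ?, ?, ?)",
        (lmp, version_id(source), source, prompt, response),
    )
    conn.commit()


def versions(conn: sqlite3.Connection, lmp: str) -> list[tuple[str, str, int]]:
    # One row per distinct version (oldest first): id, source, number of calls.
    return conn.execute(
        "SELECT version, MIN(source), COUNT(*) FROM invocations "
        "WHERE lmp = ? GROUP BY version ORDER BY MIN(rowid)",
        (lmp,),
    ).fetchall()


def diff_versions(old_source: str, new_source: str) -> str:
    # Line-based unified diff between two prompt versions.
    return "\n".join(difflib.unified_diff(
        old_source.splitlines(), new_source.splitlines(),
        fromfile="v1", tofile="v2", lineterm="",
    ))


conn = open_store()
v1 = "You are a helpful assistant.\nWrite a python tip for {name}."
v2 = "You are a concise assistant.\nWrite a python tip for {name}."
log_call(conn, "write_a_tip", v1, "Write a python tip for Ian.", "(response)")
log_call(conn, "write_a_tip", v1, "Write a python tip for Ann.", "(response)")
log_call(conn, "write_a_tip", v2, "Write a python tip for Ian.", "(response)")

for version, _source, calls in versions(conn, "write_a_tip"):
    print(version, calls)
print(diff_versions(v1, v2))
```

Hashing the source means versions appear without any manual bookkeeping: edit the prompt, call the LMP, and a new version shows up with its own history of calls.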

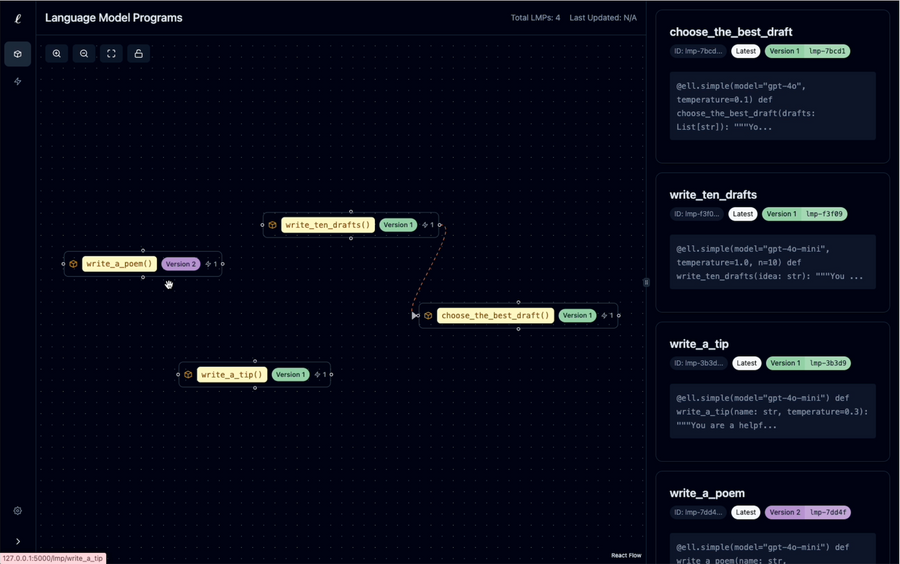

We also get a great node-based view for navigating between our LMPs. If we chain queries together as part of our program, the chain is displayed visually here.

I think you’ll agree that if you’re using LLMs through an API, this sort of tooling really helps whilst we’re experimenting. Not having to build it ourselves is awesome. If you’re interested in my full thoughts, I also made a video introducing ell over on my YouTube channel.